diff --git a/README.md b/README.md

index e19ef6c39d..ca8ce06d22 100644

--- a/README.md

+++ b/README.md

@@ -21,31 +21,32 @@ limitations under the License.

DeepSparse

Sparsity-aware deep learning inference runtime for CPUs

-

-[DeepSparse](https://github.com/neuralmagic/deepsparse) is a CPU inference runtime that takes advantage of sparsity to accelerate neural network inference. Coupled with [SparseML](https://github.com/neuralmagic/sparseml), our optimization library for pruning and quantizing your models, DeepSparse delivers exceptional inference performance on CPU hardware.

+## 🚨 2025 End of Life Announcement: DeepSparse, SparseML, SparseZoo, and Sparsify

+

+Dear Community,

+

+We’re reaching out with heartfelt thanks and important news. Following [Neural Magic’s acquisition by Red Hat in January 2025](https://www.redhat.com/en/about/press-releases/red-hat-completes-acquisition-neural-magic-fuel-optimized-generative-ai-innovation-across-hybrid-cloud), we’re shifting our focus to commercial and open-source offerings built around [vLLM (virtual large language models)](https://www.redhat.com/en/topics/ai/what-is-vllm).

+

+As part of this transition, we have ceased development and will deprecate the community versions of **DeepSparse (including DeepSparse Enterprise), SparseML, SparseZoo, and Sparsify on June 2, 2025**. After that, these tools will no longer receive updates or support.

+

+From day one, our mission was to democratize AI through efficient, accessible tools. We’ve learned so much from your feedback, creativity, and collaboration. Watching these tools become vital parts of your ML journeys has meant the world to us.

+

+Though we’re winding down the community editions, we remain committed to our original values. Now, as part of Red Hat, we’re excited to evolve our work around vLLM and deliver even more powerful solutions to the ML community.

+

+To learn more about our next chapter, visit [ai.redhat.com](https://ai.redhat.com). Thank you for being part of this incredible journey.

+

+_With gratitude, The Neural Magic Team (now part of Red Hat)_

+

+## Overview

+

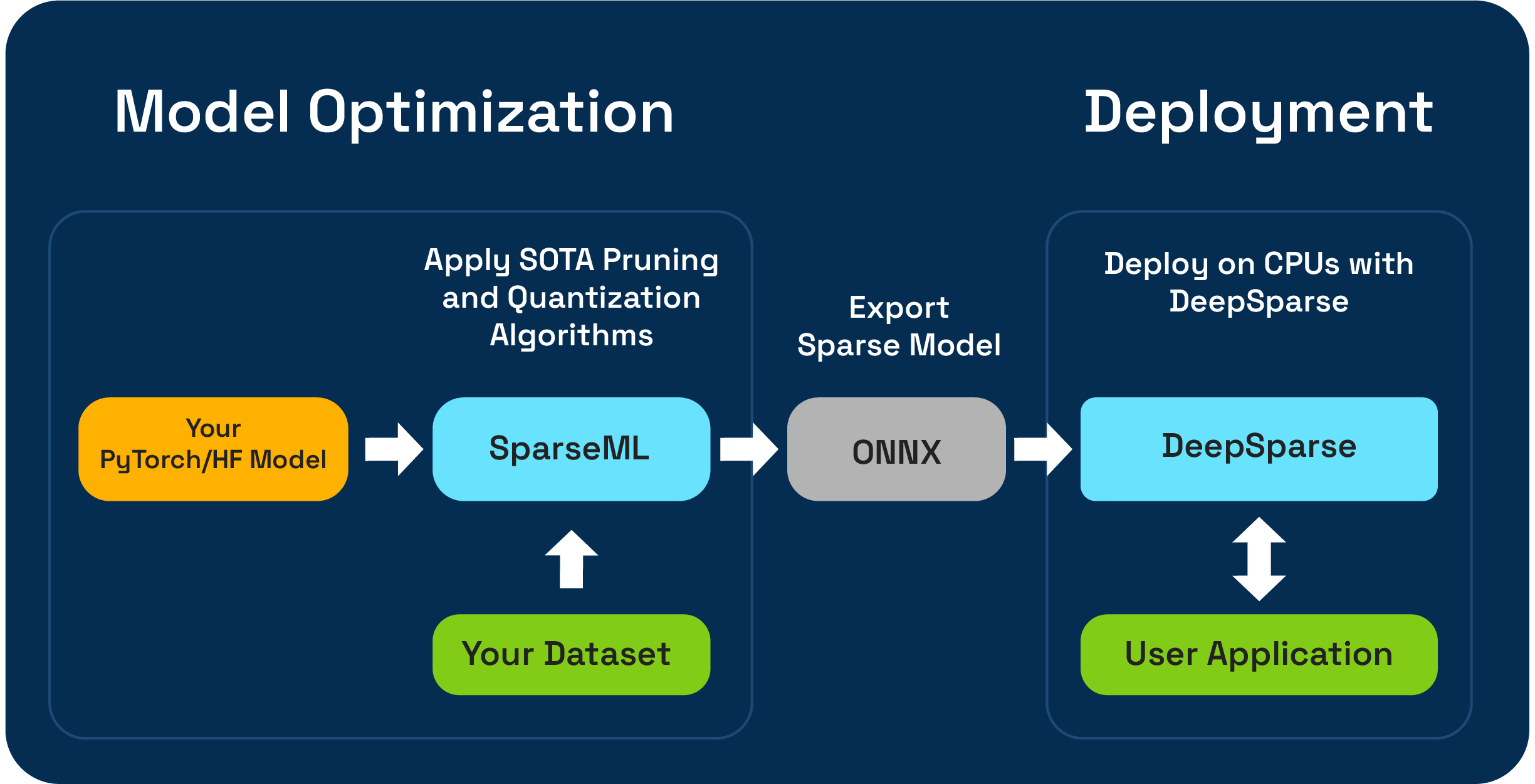

+DeepSparse is a CPU inference runtime that takes advantage of sparsity to accelerate neural network inference. Coupled with [SparseML](https://github.com/neuralmagic/sparseml), our optimization library for pruning and quantizing your models, DeepSparse delivers exceptional inference performance on CPU hardware.

-## ✨NEW✨ DeepSparse LLMs

+## DeepSparse LLMs

Neural Magic is excited to announce initial support for performant LLM inference in DeepSparse with:

- sparse kernels for speedups and memory savings from unstructured sparse weights.

@@ -208,26 +209,12 @@ export NM_DISABLE_ANALYTICS=True

Confirm that telemetry is shut off through info logs streamed with engine invocation by looking for the phrase "Skipping Neural Magic's latest package version check."

-## Community

-

-### Get In Touch

-

-- [Contribution Guide](https://github.com/neuralmagic/deepsparse/blob/main/CONTRIBUTING.md)

-- [Community Slack](https://neuralmagic.com/community/)

-- [GitHub Issue Queue](https://github.com/neuralmagic/deepsparse/issues)

-- [Subscribe To Our Newsletter](https://neuralmagic.com/subscribe/)

-- [Blog](https://www.neuralmagic.com/blog/)

+## License

-For more general questions about Neural Magic, [complete this form.](http://neuralmagic.com/contact/)

-

-### License

-

-- **DeepSparse Community** is free to use and is licensed under the [Neural Magic DeepSparse Community License.](https://github.com/neuralmagic/deepsparse/blob/main/LICENSE)

+**DeepSparse Community** is free to use and is licensed under the [Neural Magic DeepSparse Community License](https://github.com/neuralmagic/deepsparse/blob/main/LICENSE).

Some source code, example files, and scripts included in the DeepSparse GitHub repository or directory are licensed under the [Apache License Version 2.0](https://www.apache.org/licenses/LICENSE-2.0) as noted.

-- **DeepSparse Enterprise** requires a Trial License or [can be fully licensed](https://neuralmagic.com/legal/master-software-license-and-service-agreement/) for production, commercial applications.

-

-### Cite

+## Cite

Find this project useful in your research or other communications? Please consider citing: