Prompt Engineering: Solve NLP Problems with LLMs & Easily Generate Different NLP Task Prompts for Popular Generative Models like GPT, PaLM, and More with Promptify

This repository is tested on Python 3.7+ and openai 0.25+.

Install Promptify with pip:

```bash
pip3 install promptify
```

or directly from the GitHub repository:

```bash
pip3 install git+https://github.com/promptslab/Promptify.git
```

To use an LLM for your NLP task right away, use the Pipeline API:

from promptify import Prompter,OpenAI, Pipeline

sentence = """The patient is a 93-year-old female with a medical

history of chronic right hip pain, osteoporosis,

hypertension, depression, and chronic atrial

fibrillation admitted for evaluation and management

of severe nausea and vomiting and urinary tract

infection"""

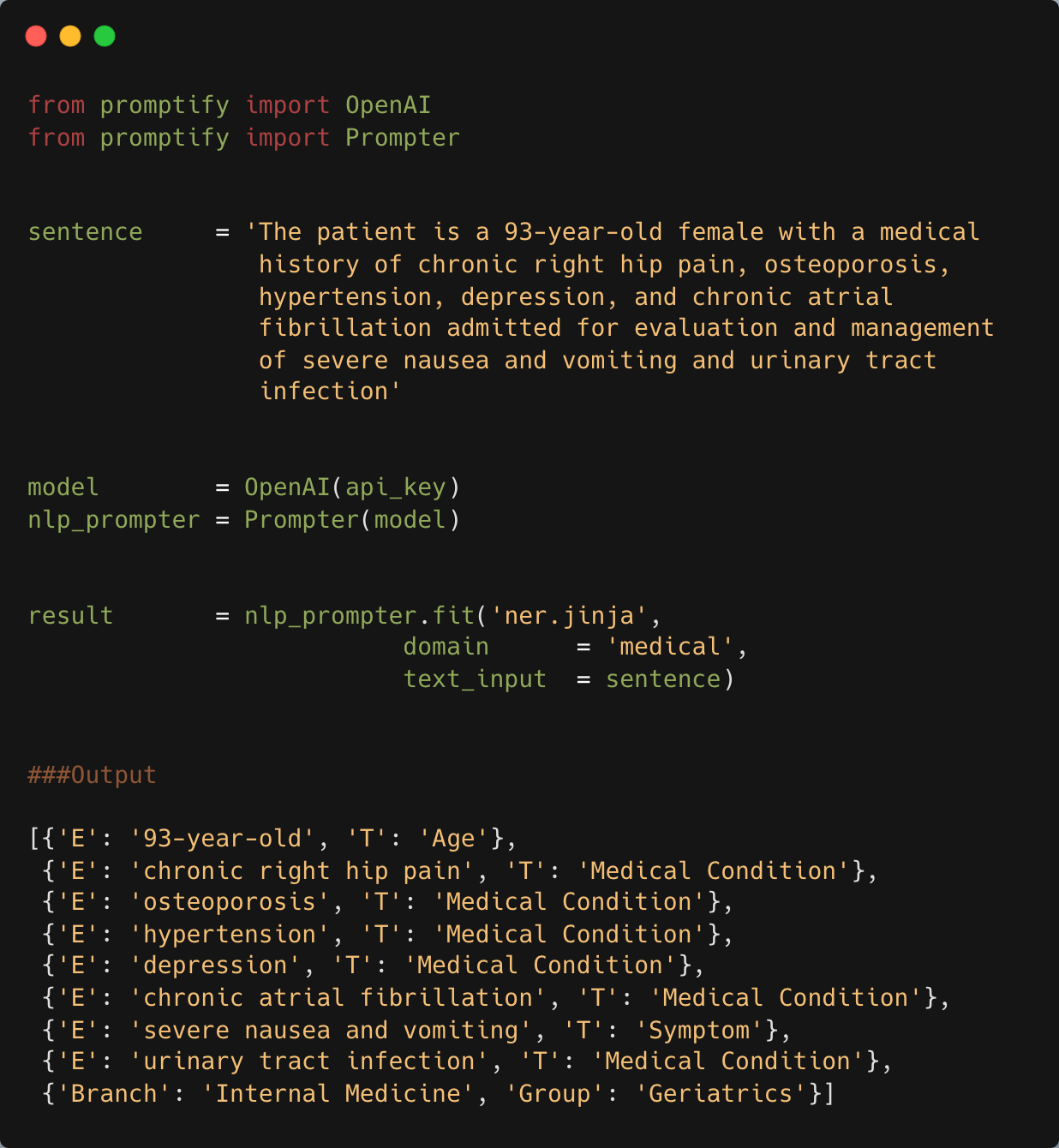

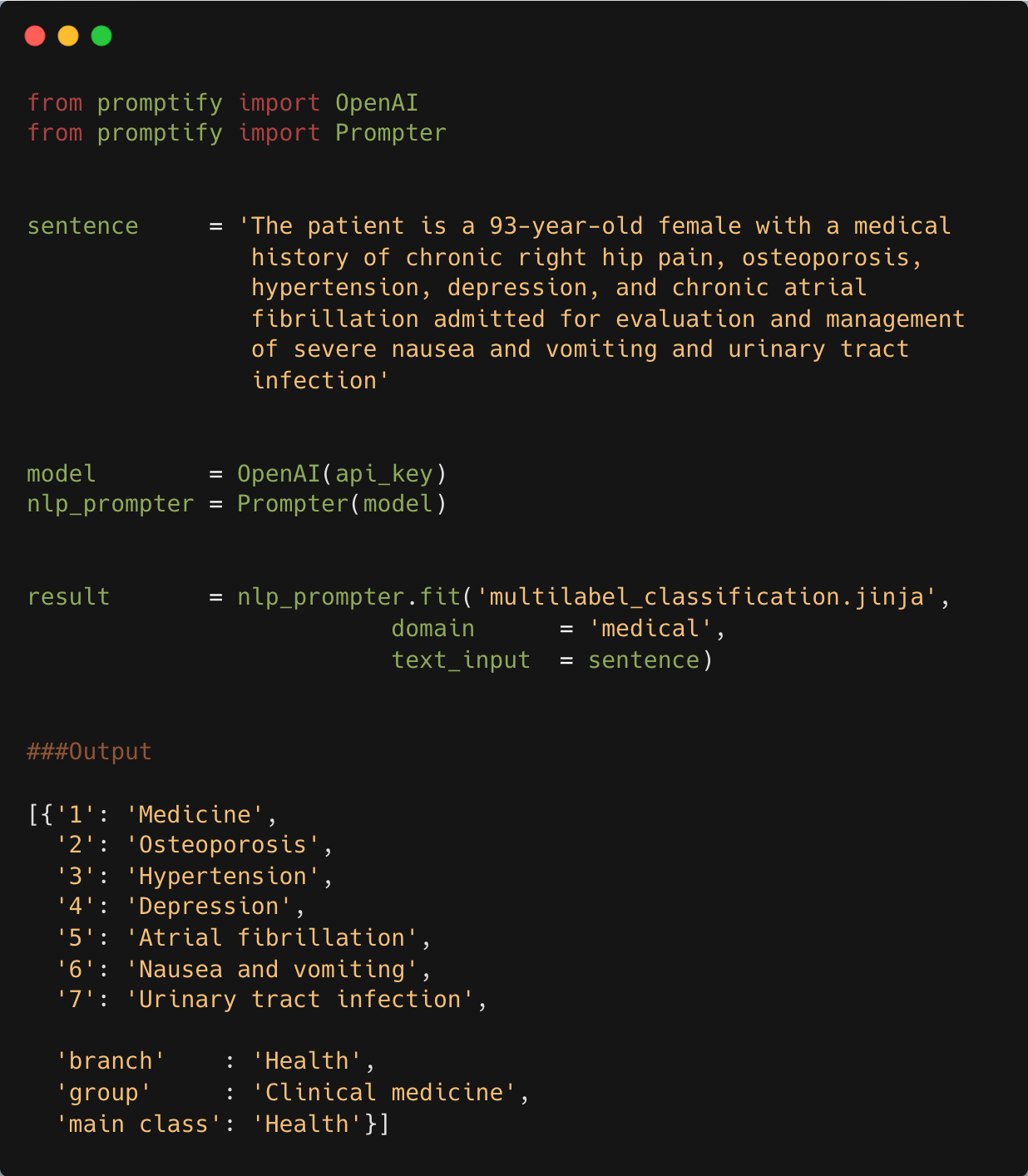

model = OpenAI(api_key) # or `HubModel()` for Huggingface-based inference or 'Azure' etc

prompter = Prompter('ner.jinja') # select a template or provide custom template

pipe = Pipeline(prompter , model)

result = pipe.fit(sentence, domain="medical", labels=None)

### Output

```python
[
    {"E": "93-year-old", "T": "Age"},
    {"E": "chronic right hip pain", "T": "Medical Condition"},
    {"E": "osteoporosis", "T": "Medical Condition"},
    {"E": "hypertension", "T": "Medical Condition"},
    {"E": "depression", "T": "Medical Condition"},
    {"E": "chronic atrial fibrillation", "T": "Medical Condition"},
    {"E": "severe nausea and vomiting", "T": "Symptom"},
    {"E": "urinary tract infection", "T": "Medical Condition"},
    {"Branch": "Internal Medicine", "Group": "Geriatrics"},
]
```
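Because the result is a plain Python list of dictionaries, ordinary Python is enough to post-process it. The sketch below assumes `result` holds exactly the list shown above; it groups entity strings (`E`) by their type (`T`) and separates out entries without an `E`/`T` pair, such as the trailing `Branch`/`Group` entry. The helper `group_by_type` is hypothetical and not part of Promptify.

```python
from collections import defaultdict

# Assumed: `result` is the list shown in the Output section above.
result = [
    {"E": "93-year-old", "T": "Age"},
    {"E": "chronic right hip pain", "T": "Medical Condition"},
    {"E": "osteoporosis", "T": "Medical Condition"},
    {"E": "hypertension", "T": "Medical Condition"},
    {"E": "depression", "T": "Medical Condition"},
    {"E": "chronic atrial fibrillation", "T": "Medical Condition"},
    {"E": "severe nausea and vomiting", "T": "Symptom"},
    {"E": "urinary tract infection", "T": "Medical Condition"},
    {"Branch": "Internal Medicine", "Group": "Geriatrics"},
]


def group_by_type(items):
    """Hypothetical helper: map each entity type ("T") to its entity strings ("E")."""
    groups = defaultdict(list)
    for item in items:
        # Skip non-entity entries such as the Branch/Group summary.
        if "E" in item and "T" in item:
            groups[item["T"]].append(item["E"])
    return dict(groups)


by_type = group_by_type(result)
conditions = by_type.get("Medical Condition", [])
# Entries without an "E" key carry document-level metadata instead of entities.
metadata = [item for item in result if "E" not in item]

print(conditions)
print(metadata)
```

The same pattern works for any filter, for example keeping only `Symptom` entities before storing them.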

- Perform NLP tasks (such as NER and classification) in just 2 lines of code, with no training data required

- Easily add one-shot, two-shot, or few-shot examples to the prompt

- Handle out-of-bounds predictions from LLMs (GPT, T5, etc.)

- Output is always provided as a Python object (e.g., list, dictionary) for easy parsing and filtering. This is a major advantage over raw LLM output, which is unstructured and therefore difficult to use in business or other applications.

- Custom examples and samples can be easily added to the prompt

- 🤗 Run inference on any model stored on the Hugging Face Hub (see notebook guide).

- Optimized prompts to reduce OpenAI token costs (coming soon)
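Few-shot prompting places a handful of solved examples ahead of the new input so the model can copy their format. The sketch below is not Promptify's template or API; it is a plain-Python illustration assuming each example is a `(sentence, entities)` pair and that the model should answer with a Python list in the same `E`/`T` format as the output above. The function `build_ner_prompt` and the prompt wording are hypothetical.

```python
def build_ner_prompt(text, examples, domain="medical", labels=None):
    """Hypothetical helper: assemble a few-shot NER prompt as a plain string."""
    lines = [f"You are a {domain} named entity recognition system."]
    if labels:
        # Restricting the label set discourages predictions outside the allowed types.
        lines.append("Only use these entity types: " + ", ".join(labels) + ".")
    lines.append('Return a Python list of {"E": entity, "T": type} dictionaries.')
    # Each solved example shows the model the expected input/output format.
    for sentence, entities in examples:
        lines.append("")
        lines.append(f"Input: {sentence}")
        lines.append(f"Output: {entities!r}")
    # The new input goes last, with the output left for the model to complete.
    lines.append("")
    lines.append(f"Input: {text}")
    lines.append("Output:")
    return "\n".join(lines)


one_shot = [
    ("The patient has type 2 diabetes.", [{"E": "type 2 diabetes", "T": "Medical Condition"}]),
]
prompt = build_ner_prompt(
    "She reports chest pain.", one_shot, labels=["Medical Condition", "Symptom"]
)
print(prompt)
```

Passing zero, one, or two examples yields a zero-shot, one-shot, or two-shot prompt from the same function.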

| Task Name | Colab Notebook | Status |

|---|---|---|

| Named Entity Recognition | NER Examples with GPT-3 | ✅ |

| Multi-Label Text Classification | Classification Examples with GPT-3 | ✅ |

| Multi-Class Text Classification | Classification Examples with GPT-3 | ✅ |

| Binary Text Classification | Classification Examples with GPT-3 | ✅ |

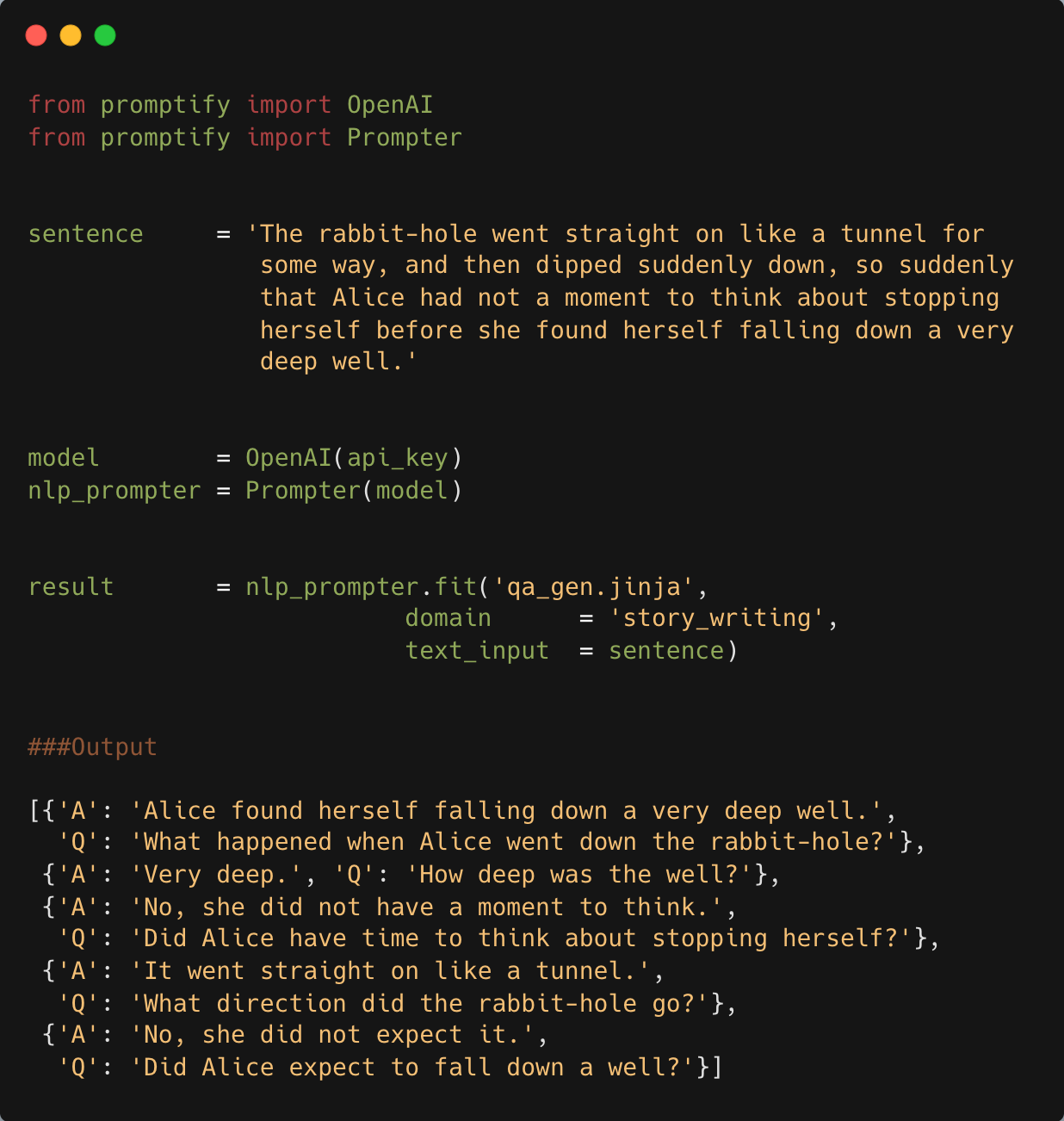

| Question-Answering | QA Task Examples with GPT-3 | ✅ |

| Question-Answer Generation | QA Task Examples with GPT-3 | ✅ |

| Relation-Extraction | Relation-Extraction Examples with GPT-3 | ✅ |

| Summarization | Summarization Task Examples with GPT-3 | ✅ |

| Explanation | Explanation Task Examples with GPT-3 | ✅ |

| SQL Writer | SQL Writer Example with GPT-3 | ✅ |

| Tabular Data | ||

| Image Data | ||

| More Prompts |

To cite Promptify in your work, please use the following BibTeX reference:

```bibtex
@misc{Promptify2022,
  title = {Promptify: Structured Output from LLMs},
  author = {Pal, Ankit},
  year = {2022},
  howpublished = {\url{https://github.com/promptslab/Promptify}},
  note = {Prompt-Engineering components for NLP tasks in Python}
}
```

We welcome contributions to our open-source project, including new features, infrastructure improvements, and more comprehensive documentation. Please see the contributing guidelines.